Disclaimer: with anything to do with IPFire, I could absolutely be wrong, confused, or incorrect…

I made some changes to makeqosscripts.pl:

```diff
 my @cake_options = (
 # RED is by default connected to the Internet
- "internet"
+ "internet diffserv4 wash ack-filter raw"
..
- print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake @cake_options\n";
+ print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake docsis nat egress overhead 20 @cake_options\n";
..
- print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 2:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake @cake_options\n";
+ print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 2:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake ethernet ingress overhead 20 @cake_options\n";
```
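To see exactly what the modified print statements hand to tc, here is a dry-run sketch that only echoes the command lines. It assumes `@cake_options` holds just the single entry shown in the diff (the real array may contain more), and the device/class pairs are example values copied from the `tc qd show` output further down, not read from IPFire’s settings.

```shell
#!/bin/sh
# Dry run: print (do not execute) the cake commands that the modified
# print statements in makeqosscripts.pl would generate.
# Assumption: CAKE_OPTIONS mirrors only the one @cake_options entry shown
# in the diff; the real array may contain additional entries.
CAKE_OPTIONS="internet diffserv4 wash ack-filter raw"

# Upload side: cake leaves hanging off htb 1: on the RED device.
egress_cmd() {
    # $1 = device, $2 = htb class id (example values only)
    echo "tc qdisc add dev $1 parent 1:$2 handle $2: cake docsis nat egress overhead 20 $CAKE_OPTIONS"
}

# Download side (assumed): cake leaves hanging off htb 2: on imq0.
ingress_cmd() {
    echo "tc qdisc add dev $1 parent 2:$2 handle $2: cake ethernet ingress overhead 20 $CAKE_OPTIONS"
}

egress_cmd red0 101
ingress_cmd imq0 200
```

Comparing these lines with what `tc qd show` reports is a quick way to see which options the kernel actually kept.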

With those changes in place, the qdiscs look like this:

```
tc qd show
qdisc noqueue 0: dev lo root refcnt 2
qdisc htb 1: dev red0 root refcnt 5 r2q 10 default 0x110 direct_packets_stat 1 direct_qlen 1000
qdisc cake 120: dev red0 parent 1:120 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 102: dev red0 parent 1:102 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 104: dev red0 parent 1:104 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 110: dev red0 parent 1:110 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 101: dev red0 parent 1:101 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 103: dev red0 parent 1:103 bandwidth unlimited diffserv4 triple-isolate nat wash ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc ingress ffff: dev red0 parent ffff:fff1 ----------------
qdisc fq_codel 8002: dev green0 root refcnt 5 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
qdisc htb 2: dev imq0 root refcnt 2 r2q 10 default 0x210 direct_packets_stat 0 direct_qlen 32
qdisc cake 203: dev imq0 parent 2:203 bandwidth unlimited diffserv4 triple-isolate nonat wash ingress ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 220: dev imq0 parent 2:220 bandwidth unlimited diffserv4 triple-isolate nonat wash ingress ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 200: dev imq0 parent 2:200 bandwidth unlimited diffserv4 triple-isolate nonat wash ingress ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 204: dev imq0 parent 2:204 bandwidth unlimited diffserv4 triple-isolate nonat wash ingress ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
qdisc cake 210: dev imq0 parent 2:210 bandwidth unlimited diffserv4 triple-isolate nonat wash ingress ack-filter split-gso rtt 100ms raw overhead 18 mpu 64
```
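The modified commands ask for `overhead 20`, yet every cake instance in this listing reports `overhead 18 mpu 64`. A small filter makes that comparison easy to repeat after each change. It is a sketch that assumes the one-line-per-qdisc layout shown above (other tc versions may print differently) and only looks at the `dev` and `overhead` fields.

```shell
#!/bin/sh
# Summarise the overhead each cake qdisc reports in "tc qd show" output.
# Assumption: one qdisc per line, in the "qdisc cake <handle> dev <dev> ..."
# layout shown above.
cake_overheads() {
    awk '$1 == "qdisc" && $2 == "cake" {
        dev = "?"; ovh = "none"
        # Scan the remaining fields for the "dev" and "overhead" keywords.
        for (i = 3; i < NF; i++) {
            if ($i == "dev") dev = $(i + 1)
            if ($i == "overhead") ovh = $(i + 1)
        }
        print dev, $3, "overhead", ovh
    }'
}

# On the firewall:
#   tc qd show | cake_overheads
```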

I’m confused by the fq_codel qdisc that is (still) on the green0 link…

I’m also confused about what imq0 is attached to… (I understand that it is itself virtual, but what physically feeds its queue?)

htb 1 is attached to red0 (makes sense).

htb 2 is attached to imq0, but (looking at -github.com/imq/linuximq/wiki/UsingIMQ) I don’t see any rules sending green traffic to imq (iptables -L -v -n).
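On the imq0 question: `iptables -L -v -n` only lists the filter table, while the linuxIMQ examples use the IMQ target in the mangle table (for example `iptables -t mangle -A PREROUTING -i eth0 -j IMQ --todev 0`). If IPFire follows that pattern, which I have not checked against its scripts, imq0 would be fed by packets arriving on red0 (download traffic) rather than by anything on green0. A sketch that pulls IMQ rules out of the mangle listing, assuming the usual `pkts bytes target ...` column layout:

```shell
#!/bin/sh
# Reads "iptables -t mangle -L -v -n" output on stdin and prints every
# rule whose target column is IMQ, prefixed with the chain it belongs to.
# Assumption: IMQ rules (if any) live in the mangle table, so plain
# "iptables -L" (filter table) would never show them.
imq_rules() {
    awk '
        /^Chain / { chain = $2; next }       # remember the current chain
        $3 == "IMQ" { print chain ": " $0 }  # column 3 is the target
    '
}

# On the firewall (needs root):
#   iptables -t mangle -L -v -n | imq_rules
```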

(Toward the end of all this… I found that the following seems to make a difference…)

```
tc qd replace dev green0 root cake diffserv4 ack-filter
```

but if I can’t prove it, I don’t really know whether I’m doing something that makes a difference…
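One way to get some evidence is to check whether the ACK filter is actually dropping anything. A sketch that totals cake’s `ack_drop` counters from `tc -s qdisc show` output, assuming the statistics include a row beginning with `ack_drop` followed by one counter per tin (the layout may differ between tc versions). It says nothing about latency, so a latency-under-load test would still be needed to show a real improvement.

```shell
#!/bin/sh
# Sum every "ack_drop" counter found in "tc -s qdisc show" output on stdin.
# Assumption: the row is "ack_drop <tin1> <tin2> ..." as printed for cake.
# Rows from several cake instances (e.g. all leaves on red0) are summed too.
ack_drops() {
    awk '$1 == "ack_drop" { for (i = 2; i <= NF; i++) total += $i }
         END { print total + 0 }'
}

# Hypothetical usage: sample the counter around a load test and compare.
#   before=$(tc -s qdisc show dev green0 | ack_drops)
#   ... run a download/upload test ...
#   after=$(tc -s qdisc show dev green0 | ack_drops)
#   echo "ACKs filtered during test: $((after - before))"
```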

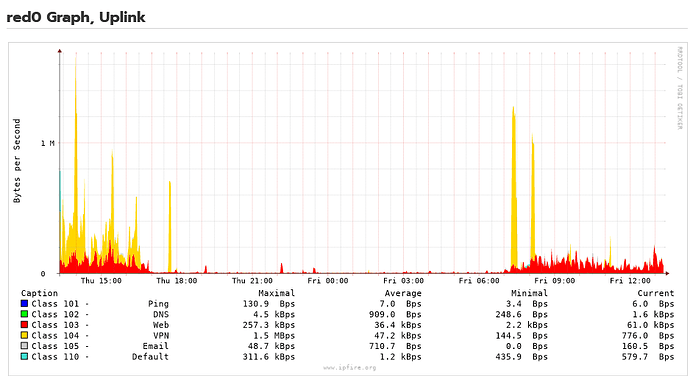

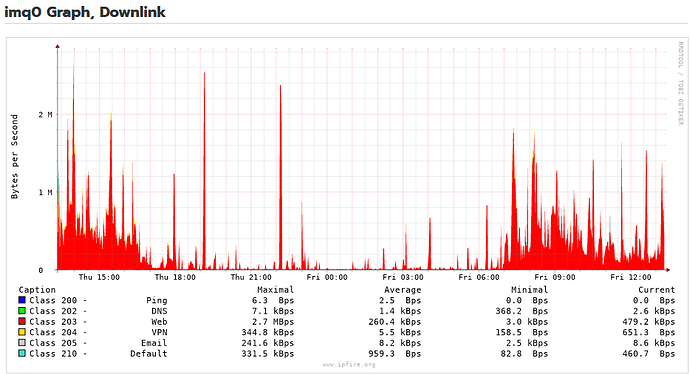

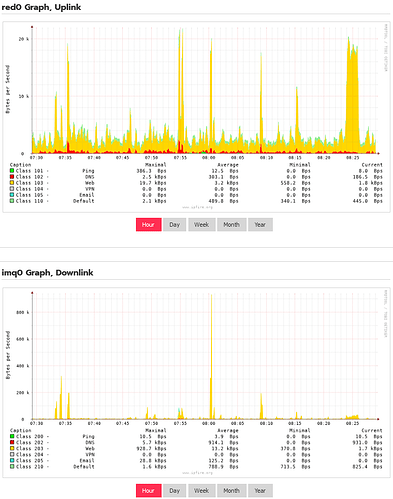

I’m on a 300/30 DOCSIS connection.
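For a sense of scale on an asymmetric link like this, here is a rough estimate of the upstream that ACKs for a full-speed download need. It assumes 300/30 means Mbit/s, full-size 1500-byte downstream packets, one ACK per two segments (delayed ACK), and at least 64 bytes per ACK on the wire (the `mpu 64` cake reports above). These are illustrative assumptions, not measurements:

$$
\frac{300 \times 10^{6}\ \text{bit/s}}{1500 \times 8\ \text{bit}} = 25\,000\ \text{packets/s},
\qquad
\frac{25\,000}{2} = 12\,500\ \text{ACKs/s}
$$

$$
12\,500\ \text{ACKs/s} \times 64 \times 8\ \text{bit} = 6.4\ \text{Mbit/s} \approx 21\%\ \text{of}\ 30\ \text{Mbit/s}
$$

That upstream share is what ack-filter (by dropping redundant ACKs) and ACK prioritization (by keeping ACKs from queueing behind bulk uploads) are trying to deal with.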

I am new to IPFire (running it since 187)… I wanted to see what cake’s ACK filtering and IMQ ACK prioritization look like… but the 101 class is labelled as ICMP (?)

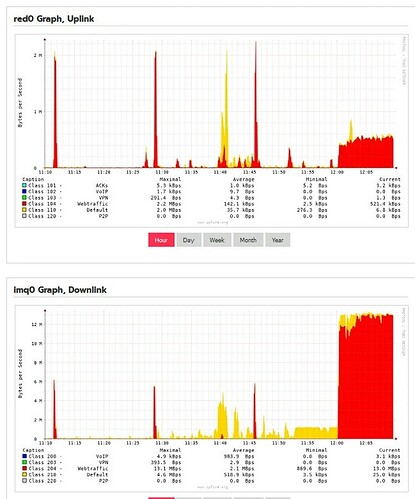

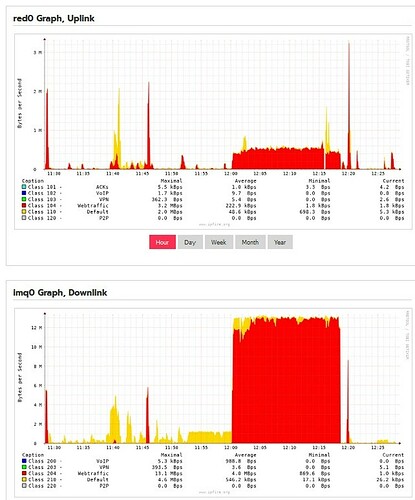

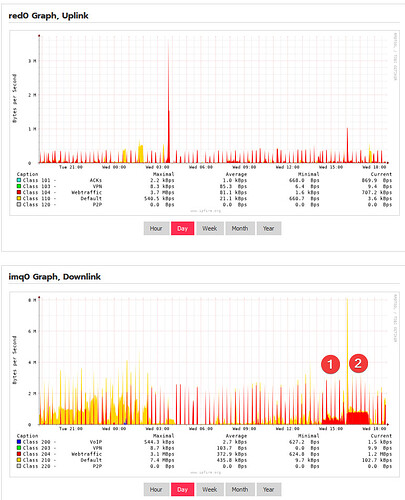

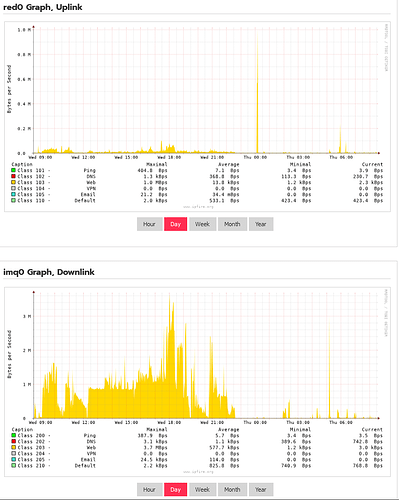

I don’t know what other people’s IMQ graphs look like… here’s mine:

-imgur.com/a/ukixlbJ

The big change is where I removed ack-filter from cake… (I think…)

-imgur.com/a/ukixlbJ

Then I added ack-filter back in…

I also have Tailscale running in the house as the exit node for three devices…

I am trying to get the most out of the link (obviously)… no one ever says their interweb is too fast…

Open to suggestions, criticism, corrections…

Thank you in advance.