Hello,

LVM has stopped working since today’s update: no LVs are displayed, and the LVM commands don’t work either. Was something changed?

I see LVM changes in CU 176 but not in CU 177:

https://git.ipfire.org/?p=ipfire-2.x.git;a=log;h=refs/heads/core177

(then search the page for LVM in your browser)

Please add more details about the issue.

Good day,

Thank you for your help.

After yesterday’s update and a restart, no LVs are shown any more.

lvm version

LVM version: 2.03.21(2) (2023-04-21)

Library version: 1.02.195 (2023-04-21)

Driver version: 4.47.0

Configuration: ./configure --prefix=/usr --with-usrlibdir=/usr/lib --enable-pkgconfig --with-udevdir=/lib/udev/rules.d --with-default-locking-dir=/run/lvm --enable-udev_rules --enable-udev_sync

[root@rosa ~]# lvscan

[root@rosa ~]# lvdisplay

[root@pink ~]#

The hard drives are all there.

What could be the reason?

Hi Fritz,

without an error log there are too many possible reasons.

Have you checked whether the LVM volumes are detected and working when you boot another distribution from a bootable image (e.g. GParted Live)? Be careful not to install anything, otherwise your data will be lost.

Hello,

When I boot a live system, the LVM volumes work just as they did on the IPFire before the update.

How can I check whether LVM is properly installed and running?

Is LVM implemented as a normal service or on the kernel side?

# /etc/rc.d/init.d/lvm2 start

-su: /etc/rc.d/init.d/lvm2: No such file or directory

Thank you for your help.

The lvm2 binary is called lvm and is located in /usr/sbin/.

If you run lvm version, you should get the following output, which is what I got on my CU177 system (I don’t use LVM myself).

lvm version

LVM version: 2.03.21(2) (2023-04-21)

Library version: 1.02.195 (2023-04-21)

Driver version: 4.47.0

Configuration: ./configure --prefix=/usr --with-usrlibdir=/usr/lib --enable-pkgconfig --with-udevdir=/lib/udev/rules.d --with-default-locking-dir=/run/lvm --enable-udev_rules --enable-udev_sync

You should also check that the udev rule is present, as it is on my system, because that rule should trigger the available LVM volumes to be mounted automatically.

ls -hal /lib/udev/rules.d/69-dm-lvm.rules

-r--r--r-- 1 root root 3.1K Jul 10 20:40 /lib/udev/rules.d/69-dm-lvm.rules
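The same check can be wrapped in a small shell function, for example to run it again after the next update. This is a minimal sketch, assuming bash; it only reports whether the rule file exists and is readable and, if so, prints the lines that call pvscan, the LVM command the rule relies on. The function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: report whether the LVM udev rule is installed.
# Defaults to the path used on IPFire CU177; pass another path to override.
check_lvm_udev_rule() {
    local rule="${1:-/lib/udev/rules.d/69-dm-lvm.rules}"
    if [ -r "$rule" ]; then
        echo "present: $rule"
        # Show the rule lines that call pvscan (line numbers included).
        grep -n 'pvscan' "$rule" || echo "no pvscan call found in $rule"
    else
        echo "missing: $rule"
        return 1
    fi
}
```

Run it without arguments to check the default location.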

Daniel Weismueller flagged up the LVM problem in CU175 that was fixed in CU176, so if there is a problem in CU177 I would expect him to flag it up in the IPFire Bugzilla as well.

You can test whether the volumes can be seen by running lvs, which should list all available LVM volumes.

Is there any mention of LVM actions in the boot log?
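A quick way to pull the relevant lines out of the boot log is a case-insensitive grep. This is a minimal sketch, assuming bash and assuming the boot messages are stored in /var/log/bootlog (pass another file if yours differs); the function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: list boot-log lines that mention LVM or the device mapper.
# ASSUMPTION: the boot log lives at /var/log/bootlog; pass another file if not.
lvm_boot_messages() {
    local log="${1:-/var/log/bootlog}"
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    # -i: case-insensitive, -n: prefix line numbers, -E: extended regex for alternation.
    grep -inE 'lvm|device-mapper|dm-[0-9]' "$log"
}
```

For the kernel side, `dmesg | grep -i -e lvm -e device-mapper` shows whether the device-mapper driver was loaded.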

ls -hal /lib/udev/rules.d/69-dm-lvm.rules

-r--r--r-- 1 root root 3.1K Jul 10 20:40 /lib/udev/rules.d/69-dm-lvm.rules

“lvs” runs without errors, but no LVs are listed…?

The LVM version is the same as yours.

# lvm version

LVM version: 2.03.21(2) (2023-04-21)

Library version: 1.02.195 (2023-04-21)

Driver version: 4.47.0

Configuration: ./configure --prefix=/usr --with-usrlibdir=/usr/lib --enable-pkgconfig --with-udevdir=/lib/udev/rules.d --with-default-locking-dir=/run/lvm --enable-udev_rules --enable-udev_sync

So lvs shows nothing!

What about vgs and pvs, do they show anything?

Exactly the same, they don’t show anything either:

# pvs

pvs pvscan

[root@fire ~]# pvscan

No matching physical volumes found

vgscan

[root@fire ~]#

Is LVM also started via “/etc/rc.d/init.d/”?

But they exist. There are 6 hard drives installed that are set up with LVM.

I don’t use LVM on my production machine and don’t have it set up on my VM testbed.

I will look at setting up LVM on an IPFire VM with CU176 and then test upgrading to CU177.

This will take a bit of time to set up and evaluate. Hopefully I will have something later tomorrow.

In the meantime, maybe another IPFire forum member who uses LVM can report whether LVM has also stopped working for them after upgrading to CU177.

No.

There is the udev rule that I mentioned earlier. It detects whether there are any logical volumes and mounts them during the boot process. However, if pvscan etc. are not showing anything, that udev rule won’t work either, because it uses those LVM commands to get the information on the physical volumes, volume groups and logical volumes and end up with a list of devices that should be activated.
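Roughly what the rule automates can also be done by hand, which helps to see at which step things fail. This is a minimal sketch, assuming bash and the standard lvm2 tools: `pvscan --cache` rescans the devices and records which PVs are online, `vgscan` lists the volume groups found, `vgchange -ay` activates every logical volume in every volume group, and `lvs` lists the result. The function names and the `DRY_RUN` switch are hypothetical additions so the commands can be previewed before running them as root.

```shell
#!/bin/bash
# Hypothetical helper: manually scan for PVs and activate all LVs.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

activate_lvm() {
    run pvscan --cache || return 1   # rescan devices, record online PVs
    run vgscan || return 1           # list the volume groups found
    run vgchange -ay || return 1     # activate every LV in every VG
    run lvs                          # show the logical volumes
}
```

`DRY_RUN=1 activate_lvm` only prints the four commands; plain `activate_lvm` runs them and stops at the first one that fails.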

Do you see the drives with “fdisk -l”?

And what kind of drives are these?

Yes, with “fdisk -l” I can see all disks.

What do you mean by what kind of drives?

They are all 3.5" HDDs, from 2 TB to 16 TB.

Can you do “lvmdiskscan” both on IPFire and the live system?

Yes, that works on both.

“lvmdiskscan”

# lvmdiskscan

/dev/ram0 [ 16.00 MiB]

/dev/ram1 [ 16.00 MiB]

/dev/sda1 [ 64.00 MiB]

/dev/ram2 [ 16.00 MiB]

/dev/sda2 [ 1.00 GiB]

/dev/ram3 [ 16.00 MiB]

/dev/sda3 [ 9.87 GiB]

/dev/ram4 [ 16.00 MiB]

/dev/sda4 [ <227.54 GiB]

/dev/ram5 [ 16.00 MiB]

/dev/ram6 [ 16.00 MiB]

/dev/ram7 [ 16.00 MiB]

/dev/ram8 [ 16.00 MiB]

/dev/ram9 [ 16.00 MiB]

/dev/ram10 [ 16.00 MiB]

/dev/ram11 [ 16.00 MiB]

/dev/ram12 [ 16.00 MiB]

/dev/ram13 [ 16.00 MiB]

/dev/ram14 [ 16.00 MiB]

/dev/ram15 [ 16.00 MiB]

/dev/sdb [ <3.64 TiB]

/dev/sdc [ <5.46 TiB]

/dev/sdd [ <3.64 TiB]

/dev/sde1 [ <3.64 TiB]

/dev/sdf1 [ 10.91 TiB]

3 disks

22 partitions

0 LVM physical volume whole disks

0 LVM physical volumes
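The last summary line is the key figure: LVM itself finds no PV labels on any device, which is consistent with pvs, vgs and lvs printing nothing. A small sketch that extracts that count, e.g. to compare the IPFire output with the live system’s, assuming bash and the summary format shown above; the function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read lvmdiskscan output on stdin and print the
# number from the "N LVM physical volume(s)" summary line.
# The "whole disks" summary line ends in "disk(s)", so it is not matched.
count_lvm_pvs() {
    awk '/LVM physical volumes?[ \t]*$/ { print $1; found = 1 }
         END { if (!found) exit 1 }'
}
```

Usage: `lvmdiskscan | count_lvm_pvs`. As a cross-check that does not depend on LVM’s own scan, `blkid -t TYPE=LVM2_member -o device` lists the devices on which blkid recognizes an LVM2 PV signature.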

When I try to open a non-LVM disk with cryptsetup:

“cryptsetup luksOpen /dev/sdx hdd”

cryptsetup: error while loading shared libraries: libssl.so.1.1: cannot open shared object file: No such file or directory
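The error means that this cryptsetup binary was linked against libssl.so.1.1 and the dynamic loader cannot find that library on the system. `ldd` marks every unresolved library with `=> not found`, so all missing libraries of a binary can be listed at once. A minimal sketch, assuming bash and that the binary is on the PATH; both function names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: keep only the "=> not found" entries of ldd output.
filter_missing() {
    awk '/=> not found/ { print $1 }'
}

# Hypothetical helper: print the shared libraries a binary needs
# but the dynamic loader cannot find.
missing_libs() {
    local bin
    bin="$(command -v "$1")" || { echo "$1: not on PATH" >&2; return 1; }
    ldd "$bin" | filter_missing
}
```

Usage: `missing_libs cryptsetup` prints nothing when all libraries resolve.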

Either you never had logical volumes or they went missing.

Maybe these threads are helpful:

I had thought that something might be wrong with the device mapper used by LVM.

The LVs are not gone, so is there something different in Core 177?

I need to separate this storage from the IPFire machine anyway, but that’s not possible at the moment.

That must have been tried on a non-IPFire system, as IPFire does not have LUKS or cryptsetup.

Regarding the LVM volumes on your IPFire:

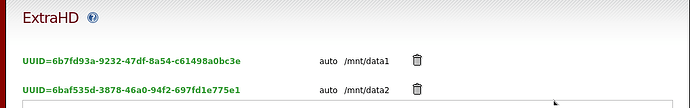

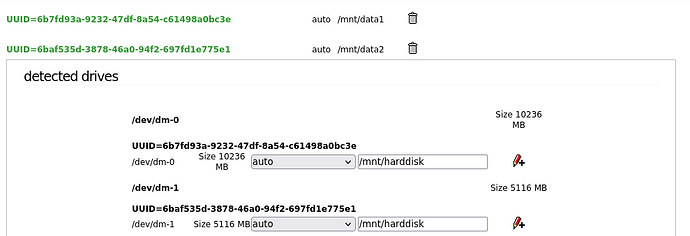

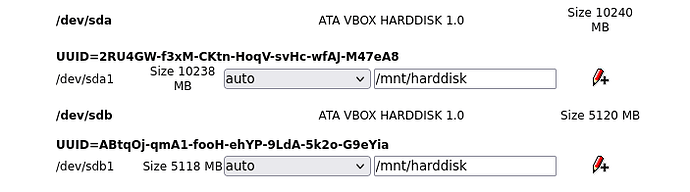

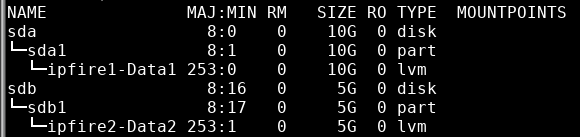

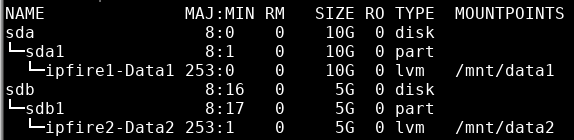

I created an IPFire VM with Core Update 176 and added two hard drives to it, set up with LVM. I created the PVs, then the VGs (ipfire1 and ipfire2), followed by an LV on each VG (data1 and data2).

I then formatted the two LVs with ext4 and mounted them on IPFire using the Extra HD menu option.
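For anyone who wants to repeat this test, the LVM part of the setup can be sketched as below. It is a minimal sketch, assuming bash and two empty test disks passed as arguments (check the device names with lsblk first); it reuses the VG and LV names from this post. These commands destroy any data on the given disks, so use them only on a test VM. The function names and the `DRY_RUN` switch, which prints the commands instead of running them, are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper reproducing the test setup: one VG and one LV per disk.
# WARNING: wipes the given disks. Set DRY_RUN=1 to only print the commands.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

setup_test_lvm() {
    [ "$#" -eq 2 ] || { echo "usage: setup_test_lvm DISK1 DISK2" >&2; return 2; }
    local i=1 disk
    for disk in "$@"; do
        run pvcreate "$disk" || return 1                             # PV on the whole disk
        run vgcreate "ipfire$i" "$disk" || return 1                  # VG ipfire1 / ipfire2
        run lvcreate -n "data$i" -l 100%FREE "ipfire$i" || return 1  # LV data1 / data2
        run mkfs.ext4 "/dev/ipfire$i/data$i" || return 1             # ext4, as in the test
        i=$((i + 1))
    done
}
```

After that, the two LVs can be mounted from the Extra HD page as described above.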

I copied some files to each of the hard disks.

I rebooted the system and both drives were available and mounted.

I removed the 69-dm-lvm.rules file and rebooted, and the LVs did not get mounted. The two mounts were shown in red instead of green.

I then remounted the LVs, rebooted again, and everything worked fine.

Then I upgraded the IPFire VM from CU176 to CU177. Before rebooting, I checked the Extra HD page and the two drives were present (also checked with lsblk on the command line).

Then I rebooted, and the two drives were present again and the files could be accessed.

So I have not been able to reproduce what you have described.

Does your Extra HD page show the LVM drives in the detected drives section, as in mine?

Looking in /dev/mapper, I can see that the two LVM drives are still present after the CU177 update.

Running lsblk, the two LVs show up, even when they failed to mount after I removed the udev rules file.

Remounting the drives from the Extra HD page resulted in the same LVs now showing their mount points.

What does your IPFire system show with the commands lsblk and ls -hal /dev/mapper?
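Both views, plus the device mapper’s own list, can be collected in one go so the output can be pasted here. A minimal sketch, assuming bash; `dmsetup ls` is an addition not mentioned above and usually needs root, and the function name is hypothetical. Tools that are not installed are skipped.

```shell
#!/bin/bash
# Hypothetical helper: print the block-device views asked for above,
# skipping any tool that is not installed.
show_block_devices() {
    local cmd
    for cmd in "lsblk -o NAME,TYPE,FSTYPE,SIZE,MOUNTPOINT" "ls -hal /dev/mapper" "dmsetup ls"; do
        echo "== $cmd =="
        if command -v "${cmd%% *}" >/dev/null 2>&1; then
            # Unquoted on purpose so the string splits into command and arguments.
            $cmd 2>&1 || true   # keep going even if one view fails
        else
            echo "(${cmd%% *} not available)"
        fi
    done
}
```

Usage: `show_block_devices`, ideally once on IPFire and once on the live system for comparison.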

Also, on the Extra HD page, the raw drives (sda and sdb) are shown as well as the LVs (dm-0 and dm-1).

Do you see the raw drives on your Extra HD page for each of the 6 drives?

I’ll test the commands again later (so far they haven’t shown anything) and then get back to you. I’ll start again from scratch.

The device mapper is related to LVM.

There is only 8% free space on the “boot” partition. Could this be the reason?

Thank you for your detailed test!

I wouldn’t have thought so. It sounds like your IPFire is booting, so the space, although small, was enough for booting.

I presume that you have defined the mount points for the drives in the root partition and not in the boot partition.

At 8% free, it would be good to plan a fresh install, which will provide a larger boot partition, and then restore from the backup.
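To keep an eye on the boot partition until then, a minimal sketch that prints its free space as a percentage, assuming bash, POSIX `df -P` output and that the partition is mounted at /boot; the function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print the free space of a mount point in percent.
# df -P prints one line per filesystem; field 5 is "Use%", e.g. "92%".
percent_free() {
    local mnt="${1:-/boot}"
    df -P "$mnt" | awk 'NR == 2 { sub(/%/, "", $5); print 100 - $5 }'
}
```

Usage: `percent_free` for /boot, or `percent_free /` for the root partition.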