I did one of these posts many years ago, in 2015. Internet speeds were much slower back then; the line I presented was 10Mbps/2Mbps.

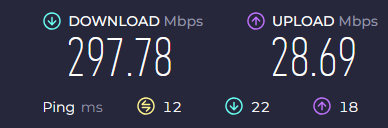

Today I want to post my current home setup. The ISP says this is a 250/25 connection, but actual speeds are closer to 300/30 when the network is quiet, and sometimes even faster. Note that the downlink (22ms) and uplink (18ms) pings are higher than the idle (12ms) ping.

I’m going to discuss a couple of things that break the standard rules for QoS (such as the rule to reduce your ISP speeds by 3%). This is partly due to the power (or lack thereof) of the firewall’s CPU, and partly due to normal variation in internet connections.

First, when you set your ISP speeds at the top of the QoS page, a 10% reduction is applied automatically behind the scenes in the settings file. If your network and buffers can handle it, you can “overclock” these numbers so that, after the 10% reduction, your speeds are where you want them. The settings file is in /var/ipfire/qos. Here is the relevant part of my file:

INC_SPD=350000 <-what you input in the QoS page

OUT_SPD=30000 <-what you input in the QoS page

DEF_INC_SPD=315000 <-this is generated automatically in this file

DEF_OUT_SPD=27000 <-this is generated automatically in this file

You can see that my entered values of 350000 and 30000 have already been reduced to 315000/27000. In practice, as long as these numbers are not lower than the maximum speed of your fastest class, you can make them as high as you want. Mine end up at 315/27 Mbps.

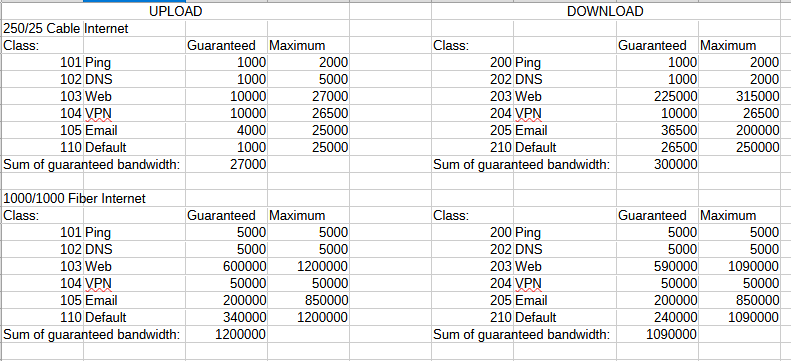
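The 10% reduction and the “overclock” workaround come down to simple arithmetic. Here is a minimal Python sketch, assuming the reduction is exactly 10% and that the result is rounded down to a whole kbit/s (the rounding is an assumption, and `effective_speed` / `required_input` are hypothetical helper names, not IPFire code):

```python
def effective_speed(entered_kbit: int) -> int:
    """Value written to DEF_INC_SPD / DEF_OUT_SPD: the entered speed minus 10%.

    Rounding down to a whole kbit/s is an assumption made for this sketch.
    """
    return entered_kbit * 9 // 10


def required_input(target_kbit: int) -> int:
    """Smallest value to enter on the QoS page so the reduced speed reaches target_kbit."""
    # Ceiling division: smallest x with x * 9 / 10 >= target_kbit.
    return -(-target_kbit * 10 // 9)


print(effective_speed(350000), effective_speed(30000))  # 315000 27000
print(required_input(315000), required_input(27000))    # 350000 30000
```

With the values from my settings file, entering 350000/30000 yields 315000/27000, and working backwards from a target speed gives the number to type into the page.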

Now, when you start adjusting the Guaranteed and Maximum bandwidths of your classes, make sure you do not exceed any buffers. Here is what my classes file in /var/ipfire/qos looks like:

imq0;200;1;1000;2000;;;;Ping;

imq0;202;2;1000;2000;;;;DNS;

imq0;203;3;225000;300000;;;;Web;

imq0;204;4;10000;26500;;;;VPN;

imq0;205;5;36500;200000;;;;Email;

imq0;210;6;26500;250000;;;;Default;

red0;101;1;1000;2000;;;;Ping;

red0;102;2;1000;5000;;;;DNS;

red0;103;3;10000;27000;;;;Web;

red0;104;4;10000;26500;;;;VPN;

red0;105;5;4000;25000;;;;Email;

red0;110;6;1000;25000;;;;Default;
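The settings rule (the generated DEF_*_SPD values must not be lower than your fastest class) and the requirement that guaranteed bandwidths must not add up to more than what is available can both be checked with a short script. This is a sketch: the field layout (interface;class;priority;guaranteed;maximum;...;name;) is inferred from the lines above, and treating imq0 as the downlink and red0 as the uplink matches the sums discussed in this post. `summarize` and `check` are hypothetical names.

```python
from collections import defaultdict

# Classes file from above. Field layout inferred from these lines (assumption):
# interface;class id;priority;guaranteed kbit/s;maximum kbit/s;...;name;
CLASSES = """\
imq0;200;1;1000;2000;;;;Ping;
imq0;202;2;1000;2000;;;;DNS;
imq0;203;3;225000;300000;;;;Web;
imq0;204;4;10000;26500;;;;VPN;
imq0;205;5;36500;200000;;;;Email;
imq0;210;6;26500;250000;;;;Default;
red0;101;1;1000;2000;;;;Ping;
red0;102;2;1000;5000;;;;DNS;
red0;103;3;10000;27000;;;;Web;
red0;104;4;10000;26500;;;;VPN;
red0;105;5;4000;25000;;;;Email;
red0;110;6;1000;25000;;;;Default;
"""


def summarize(text):
    """Return {interface: (sum of guaranteed bandwidths, highest maximum bandwidth)}."""
    guaranteed = defaultdict(int)
    highest_max = defaultdict(int)
    for line in text.splitlines():
        if not line.strip():
            continue
        fields = line.split(";")
        dev, rate, maximum = fields[0], int(fields[3]), int(fields[4])
        guaranteed[dev] += rate
        highest_max[dev] = max(highest_max[dev], maximum)
    return {dev: (guaranteed[dev], highest_max[dev]) for dev in guaranteed}


def check(summary, limits):
    """Compare each interface against its DEF_*_SPD value and list any problems."""
    problems = []
    for dev, (g_sum, top) in summary.items():
        if g_sum > limits[dev]:
            problems.append(f"{dev}: guaranteed total {g_sum} exceeds {limits[dev]}")
        if top > limits[dev]:
            problems.append(f"{dev}: class maximum {top} exceeds {limits[dev]}")
    return problems


summary = summarize(CLASSES)
print(summary)  # {'imq0': (300000, 300000), 'red0': (27000, 27000)}
# imq0 = downlink (DEF_INC_SPD), red0 = uplink (DEF_OUT_SPD)
print(check(summary, {"imq0": 315000, "red0": 27000}))  # []
```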

A couple of things to note:

- Make sure that the sum of the guaranteed bandwidths across all classes does not exceed your maximum bandwidth. In the classes file above, the guaranteed downlink bandwidths add up to 300000 and the guaranteed uplink bandwidths add up to 27000. Classes 103/203 (Web) have maximum bandwidths of 27000/300000 respectively. Bingo!

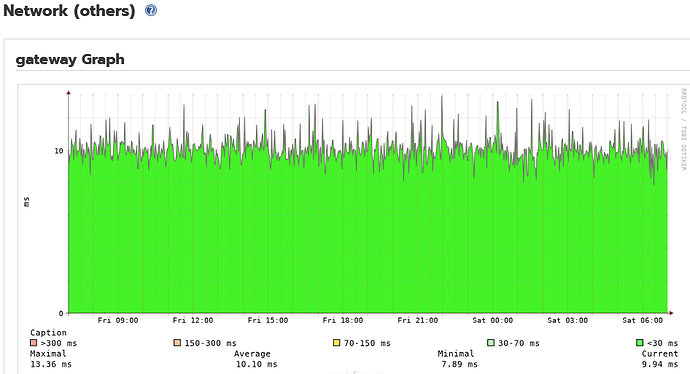

- Use a bufferbloat testing site to fine-tune these values. When you find the best combination of speed and low latency, save it and run it for a while. Test again during peak network usage to see whether latency stays low. Also check the gateway graph to see whether spikes in gateway latency are reduced or eliminated; that is a good indication that QoS is set up well.
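When comparing test runs, it helps to reduce each one to a single number. One possible yardstick (my choice for this sketch, not something the test sites define) is the worst latency increase over idle under load. The example uses the 12ms idle, 22ms download and 18ms upload pings quoted at the top of this post; `BloatResult` and `best_run` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class BloatResult:
    """One bufferbloat test run; all latencies in milliseconds."""
    label: str
    idle_ms: float
    download_ms: float  # latency measured while the download test runs
    upload_ms: float    # latency measured while the upload test runs

    def worst_increase(self) -> float:
        """Largest latency increase over idle, in either direction."""
        return max(self.download_ms - self.idle_ms, self.upload_ms - self.idle_ms)


def best_run(runs):
    """Pick the run whose worst-case latency increase is smallest."""
    return min(runs, key=lambda r: r.worst_increase())


run = BloatResult("current line", idle_ms=12, download_ms=22, upload_ms=18)
print(run.worst_increase())  # 10
```

This deliberately ignores throughput; to find the best combination of speed and latency you would also compare the measured speeds, for example as a tie-breaker.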

Regarding the CPU in your IPFire box: if it is too slow, it won’t be able to keep up with the speeds you set. Most CPUs should be able to sustain at least 100Mbps with QoS enabled. If your internet speeds are higher than that, you are more likely to run into computational slowdowns, and the only way to know for sure is trial and error. For example, I tested an Intel J3160 CPU and it had trouble maintaining speeds as I increased the maximum QoS downlink speed. What you can try in this situation is additional “overclocking”: increase your QoS bandwidths beyond the actual maximum speeds so that the end result, after the CPU slowdown, is where you’d like it to be. For example, you might try setting your downlink speed to 300Mbps to achieve a real-world speed of 250Mbps. Again, lots of trial and error will be involved, but it can pay off.
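The trial-and-error loop can at least be given a starting point. This sketch assumes, crudely, that CPU-limited throughput scales linearly with the configured speed, which real hardware will not follow exactly; `compensated_setting` is a hypothetical helper. Feed it one trial (what you configured and what you measured) and it estimates what to enter next:

```python
import math


def compensated_setting(target_mbps: float, configured_mbps: float, measured_mbps: float) -> int:
    """Estimate the QoS speed to enter so real throughput lands near target_mbps.

    Crude linear assumption: if configured_mbps produced measured_mbps, then
    throughput is taken to be proportional to the configured speed.
    """
    if measured_mbps <= 0:
        raise ValueError("measured throughput must be positive")
    return math.ceil(target_mbps * configured_mbps / measured_mbps)


# Trial: 300 configured gave 250 measured; aim for 280 next.
print(compensated_setting(280, 300, 250))  # 336
```

Retest after each change and feed the new measurement back in, since the relationship is unlikely to stay linear as the CPU approaches its limit.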

Lastly (and this is outside the main scope of this post), you can customize which types of network traffic are shaped via the portconfig and level7config files. Here are mine:

portconfig

101;red0;icmp;;;;;

102;red0;tcp;;;;53;

102;red0;tcp;;;;853;

102;red0;udp;;;;53;

102;red0;udp;;;;853;

103;red0;tcp;;;;443;

103;red0;tcp;;;;80;

104;red0;esp;;;;;

104;red0;tcp;;1194;;;

104;red0;tcp;;;;1194;

104;red0;udp;;1194;;;

104;red0;udp;;4500;;4500;

104;red0;udp;;500;;500;

104;red0;udp;;;;1194;

105;red0;tcp;;25;;;

105;red0;tcp;;465;;;

105;red0;tcp;;587;;;

105;red0;tcp;;;;25;

105;red0;tcp;;;;465;

105;red0;tcp;;;;587;

105;red0;udp;;25;;;

105;red0;udp;;465;;;

105;red0;udp;;587;;;

105;red0;udp;;;;25;

105;red0;udp;;;;465;

105;red0;udp;;;;587;

200;imq0;icmp;;;;;

202;imq0;tcp;;53;;;

202;imq0;tcp;;853;;;

202;imq0;udp;;53;;;

202;imq0;udp;;853;;;

203;imq0;tcp;;443;;;

203;imq0;tcp;;80;;;

204;imq0;esp;;;;;

204;imq0;tcp;;1194;;;

204;imq0;tcp;;;;1194;

204;imq0;udp;;1194;;;

204;imq0;udp;;4500;;4500;

204;imq0;udp;;500;;500;

204;imq0;udp;;;;1194;

205;imq0;tcp;;110;;;

205;imq0;tcp;;993;;;

205;imq0;tcp;;995;;;

205;imq0;tcp;;;;110;

205;imq0;tcp;;;;993;

205;imq0;tcp;;;;995;

205;imq0;udp;;110;;;

205;imq0;udp;;993;;;

205;imq0;udp;;995;;;

205;imq0;udp;;;;110;

205;imq0;udp;;;;993;

205;imq0;udp;;;;995;
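To see how these rules sort traffic into classes, here is a simplified Python model of the matching. The field layout (class;interface;protocol;source IP;source port;destination IP;destination port;) is inferred from the lines above, an empty field is treated as a wildcard, and first-match order is a simplification; the filters IPFire actually generates may be evaluated differently. Only a subset of the rules is included, and `parse` / `classify` are hypothetical names.

```python
from typing import Optional

# Subset of the portconfig lines above. Layout inferred (assumption):
# class;interface;protocol;src ip;src port;dst ip;dst port;
PORTCONFIG = """\
101;red0;icmp;;;;;
102;red0;udp;;;;53;
103;red0;tcp;;;;443;
104;red0;udp;;4500;;4500;
105;red0;tcp;;;;587;
200;imq0;icmp;;;;;
202;imq0;udp;;53;;;
203;imq0;tcp;;443;;;
205;imq0;tcp;;993;;;
"""


def parse(text):
    """Turn each line into (class, interface, protocol, src port, dst port); '' -> None."""
    rules = []
    for line in text.splitlines():
        if not line.strip():
            continue
        cls, dev, proto, _sip, sport, _dip, dport = line.split(";")[:7]
        rules.append((int(cls), dev, proto,
                      int(sport) if sport else None,
                      int(dport) if dport else None))
    return rules


def classify(rules, dev, proto, sport=None, dport=None) -> Optional[int]:
    """Return the first matching class id, or None if no rule matches.

    Unmatched traffic presumably lands in the Default class (110/210).
    """
    for cls, r_dev, r_proto, r_sport, r_dport in rules:
        if r_dev != dev or r_proto != proto:
            continue
        if r_sport is not None and r_sport != sport:
            continue
        if r_dport is not None and r_dport != dport:
            continue
        return cls
    return None


rules = parse(PORTCONFIG)
print(classify(rules, "red0", "tcp", sport=51000, dport=443))  # 103
print(classify(rules, "imq0", "udp", sport=53, dport=40000))   # 202
print(classify(rules, "red0", "tcp", sport=51000, dport=22))   # None
```

Note how direction matters: on red0 (outgoing) the well-known port appears as the destination port, while on imq0 (incoming) it appears as the source port.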

level7config

102;red0;dns;;;

102;red0;rtp;;;

102;red0;skypetoskype;;;

103;red0;http;;;

103;red0;ssl;;;

104;red0;rdp;;;

104;red0;ssh;;;

104;red0;vnc;;;

105;red0;imap;;;

105;red0;smtp;;;

202;imq0;dns;;;

202;imq0;rtp;;;

202;imq0;skypetoskype;;;

203;imq0;http;;;

203;imq0;ssl;;;

204;imq0;rdp;;;

204;imq0;ssh;;;

204;imq0;vnc;;;

205;imq0;imap;;;

205;imq0;pop3;;;
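Since every portconfig and level7config entry points at a class id, a typo (say, a 1xx id on imq0) would presumably leave that traffic unshaped. A quick sanity check, sketched in Python under the assumption that the first three level7config fields are class;interface;protocol (inferred from the lines above), is to confirm that each entry refers to a class defined on the same interface in the classes file. `entries`, `undefined_refs` and `protocols_by_class` are hypothetical names.

```python
# Class ids defined in the classes file above, keyed by interface.
DEFINED = {
    "imq0": {200, 202, 203, 204, 205, 210},
    "red0": {101, 102, 103, 104, 105, 110},
}

# level7config from above. Layout inferred (assumption): class;interface;protocol;...
LEVEL7 = """\
102;red0;dns;;;
102;red0;rtp;;;
102;red0;skypetoskype;;;
103;red0;http;;;
103;red0;ssl;;;
104;red0;rdp;;;
104;red0;ssh;;;
104;red0;vnc;;;
105;red0;imap;;;
105;red0;smtp;;;
202;imq0;dns;;;
202;imq0;rtp;;;
202;imq0;skypetoskype;;;
203;imq0;http;;;
203;imq0;ssl;;;
204;imq0;rdp;;;
204;imq0;ssh;;;
204;imq0;vnc;;;
205;imq0;imap;;;
205;imq0;pop3;;;
"""


def entries(text):
    """Yield (class id, interface, protocol) for each non-empty line."""
    for line in text.splitlines():
        if line.strip():
            cls, dev, proto = line.split(";")[:3]
            yield int(cls), dev, proto


def undefined_refs(text, defined):
    """Entries pointing at a class id that does not exist on that interface."""
    return [e for e in entries(text) if e[0] not in defined.get(e[1], set())]


def protocols_by_class(text):
    """Group protocol names by the class they are assigned to."""
    groups = {}
    for cls, _dev, proto in entries(text):
        groups.setdefault(cls, []).append(proto)
    return groups


print(undefined_refs(LEVEL7, DEFINED))  # []
print(protocols_by_class(LEVEL7)[205])  # ['imap', 'pop3']
```

The same check works for portconfig, since its first two fields are also the class id and the interface.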

Finally, here is the complete QoS page from the UI:

ipfire.home - QoS.pdf (416.9 KB)

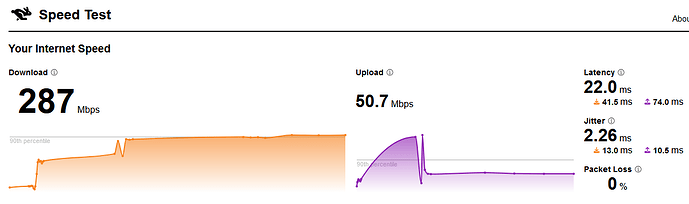

And here are speedtest results:

Speedtest.net (note that the download and upload latencies are lower than the idle latency):

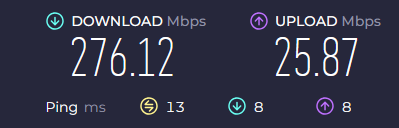

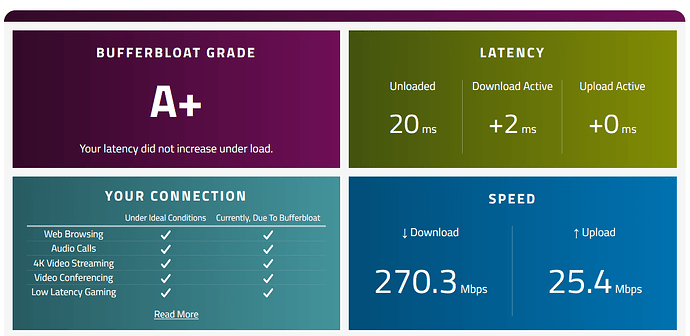

Cloudflare Speedtest:

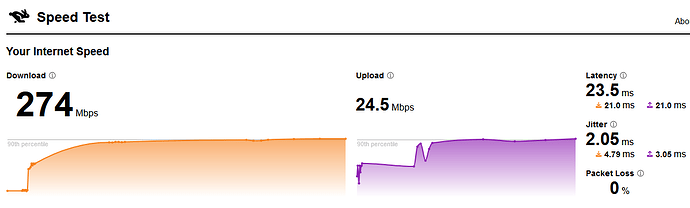

Waveform Bufferbloat:

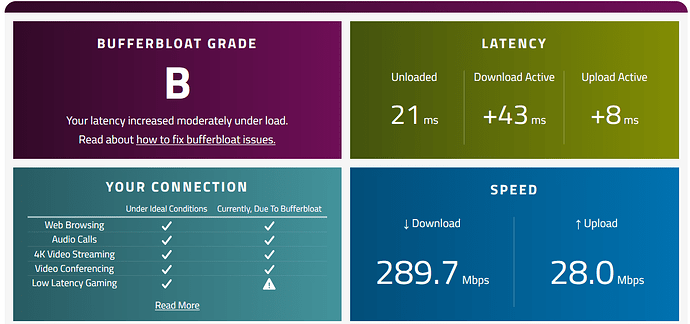

For comparison, here are speedtest results with QoS DISabled:

Cloudflare:

Waveform:

Note the increased latency during downloads and uploads. Also, the 50Mbps upload in the Cloudflare result is mostly fake: our ISP provides a temporary burst speed that is quickly used up, after which the upload drops back to ~29Mbps.