Hey everyone,

Here’s the scenario.

1 Gbps download (RX) with 40 Mbps upload (TX)

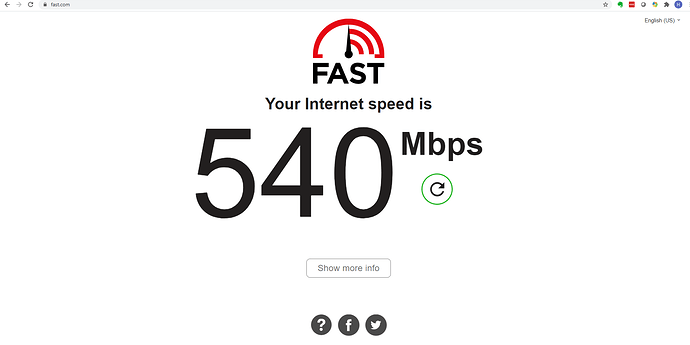

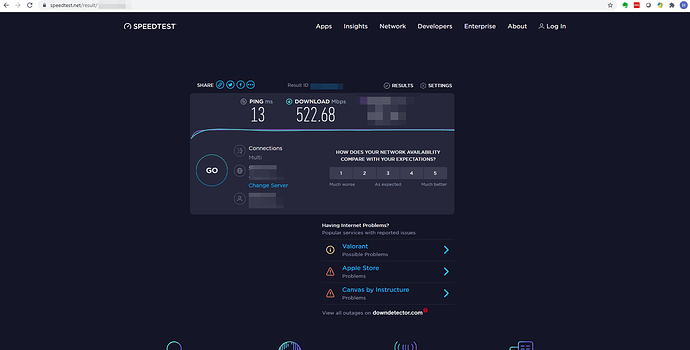

I relocated and took my IPFire installation with me, as it was. It was super fast at my old location with bi-directional 1 Gbps fiber, but where I am now, 1 Gbps download is what I am paying for. In the 3 months I have been here, I have had 9 ISP techs onsite, and I am still seeing a slight shortfall in incoming bandwidth, BUT at least it is floating around 800 Mbps at the wall plate and the modem. That part seems good to go; however, I was still getting a crap 250 Mbps download inside, on my hardwired red0 interface.

I have been troubleshooting like a madman, because this is interfering with a lot of what I have going on. I installed the speedtest CLI on IPFire with Pakfire and ran it: still only about 250 Mbps download at best. I then put a gigabit unmanaged switch between the ISP modem and IPFire so I had an inline tap, and kept testing with the same results.
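Single speedtest runs bounce around (the two runs further down gave 205.47 and 228.72 Mbit/s), so averaging a few runs gives a steadier number to compare against. A minimal sketch, assuming speedtest-cli prints its usual `Download: <value> Mbit/s` line (as in the output in this post); the function name `avg_download` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: average the "Download: N Mbit/s" lines that
# speedtest-cli prints, reading one or more runs from stdin.
# Exits with status 1 if no Download line was found.
avg_download() {
    awk '/^Download:/ { sum += $2; n++ }
         END { if (n > 0) { printf "%.1f\n", sum / n } else { exit 1 } }'
}

# Example (on the firewall): three runs, then the average.
# for i in 1 2 3; do speedtest --simple; done | avg_download
```

The same function works on the computer plugged straight into the modem's switch, so both paths can be compared the same way.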

Finally I said screw it, pulled out the red0 ingress cable (Cat-8), plugged my computer directly into the switch connected to the modem, and reset the modem. Lo and behold, I am getting 800-900 Mbps download consistently.

Keep in mind, I have had a solid IPFire instance for a while now, just applying the upgrades as they came. I move, and then all heck breaks loose: for some reason I can’t get the full bandwidth on red0, and I have yet to put my finger on what is causing it.

I read some articles and temporarily disabled offloading on my NIC - didn’t work.

I read more and completely shut off QoS, because I had seen complaints that it was cutting into folks’ bandwidth - that also didn’t work.

I also tried disabling Auto-negotiation - Also didn’t work.

I also tried disabling TCP offloading on the network card interfaces (red, green, blue) - Nope, that didn’t work either.
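For reference, one common way to switch offloads off for a test is `ethtool -K`, which only lasts until the next reboot. A minimal sketch; the helper name `disable_offloads` and the choice of `tso`, `gso` and `gro` are assumptions, and setting `ETHTOOL=echo` gives a dry run that prints the arguments instead of changing anything.

```shell
#!/bin/sh
# Hypothetical helper: turn off TCP segmentation (tso), generic
# segmentation (gso) and generic receive (gro) offload on one interface.
# Settings made this way last only until the next reboot.
# Set ETHTOOL=echo to print the arguments instead of running ethtool.
disable_offloads() {
    iface="${1:?usage: disable_offloads IFACE}"
    ${ETHTOOL:-ethtool} -K "$iface" tso off gso off gro off
}

# Example:
# disable_offloads red0 && ethtool -k red0 | grep -E 'segmentation-offload|receive-offload'
```

If a change like this ever did help, that same `ethtool -K` line is what would go into /etc/sysconfig/rc.local so it survives a reboot.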

I didn’t see the point in writing the settings to /etc/sysconfig/rc.local until I could confirm that they actually fixed something.

This is a 4-port, full-duplex-capable network card, and it was darn fast at my last location, but now… uggg…

Can you help me determine what the issue is please?

```
[root@ipfire /]# ethtool -k red0 > ~/red0settings
[root@ipfire ~]# cat red0settings
Features for red0:
rx-checksumming: on
tx-checksumming: on
tx-checksum-ipv4: off [fixed]
tx-checksum-ip-generic: on
tx-checksum-ipv6: off [fixed]
tx-checksum-fcoe-crc: off [fixed]
tx-checksum-sctp: on
scatter-gather: on
tx-scatter-gather: on
tx-scatter-gather-fraglist: off [fixed]
tcp-segmentation-offload: on
tx-tcp-segmentation: on
tx-tcp-ecn-segmentation: off [fixed]
tx-tcp-mangleid-segmentation: off
tx-tcp6-segmentation: on
udp-fragmentation-offload: off
generic-segmentation-offload: on
generic-receive-offload: on
large-receive-offload: off [fixed]
rx-vlan-offload: on
tx-vlan-offload: on
ntuple-filters: off
receive-hashing: on
highdma: on [fixed]
rx-vlan-filter: on [fixed]
vlan-challenged: off [fixed]
tx-lockless: off [fixed]
netns-local: off [fixed]
tx-gso-robust: off [fixed]
tx-fcoe-segmentation: off [fixed]
tx-gre-segmentation: on
tx-gre-csum-segmentation: on
tx-ipxip4-segmentation: on
tx-ipxip6-segmentation: on
tx-udp_tnl-segmentation: on
tx-udp_tnl-csum-segmentation: on
tx-gso-partial: on
tx-sctp-segmentation: off [fixed]
tx-esp-segmentation: off [fixed]
fcoe-mtu: off [fixed]
tx-nocache-copy: off
loopback: off [fixed]
rx-fcs: off [fixed]
rx-all: off
tx-vlan-stag-hw-insert: off [fixed]
rx-vlan-stag-hw-parse: off [fixed]
rx-vlan-stag-filter: off [fixed]
l2-fwd-offload: off [fixed]
hw-tc-offload: off [fixed]
esp-hw-offload: off [fixed]
esp-tx-csum-hw-offload: off [fixed]
rx-udp_tunnel-port-offload: off [fixed]
[root@ipfire ~]# nano turn_off_offline_on_nics
```
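In an `ethtool -k` listing like the one above, features marked `[fixed]` cannot be changed, so only the plain `on` entries are candidates for an offload test. A minimal sketch that filters a saved listing such as `~/red0settings` down to those; the function name `changeable_on` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `ethtool -k IFACE` output on stdin and print
# the features that are currently "on" and not marked [fixed], i.e. the
# ones that can still be toggled with `ethtool -K`.
changeable_on() {
    awk -F': ' '$2 == "on" { sub(/^[ \t]+/, "", $1); print $1 }'
}

# Example: changeable_on < ~/red0settings
```

Entries such as `highdma: on [fixed]` are skipped because their value is not exactly `on`.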

```
[root@ipfire /]# ethtool red0
Settings for red0:
Supported ports: [ TP ]
Supported link modes: 10baseT/Half 10baseT/Full
                      100baseT/Half 100baseT/Full
                      1000baseT/Full
Supported pause frame use: Symmetric
Supports auto-negotiation: Yes
Advertised link modes: 10baseT/Half 10baseT/Full
                       100baseT/Half 100baseT/Full
                       1000baseT/Full
Advertised pause frame use: Symmetric
Advertised auto-negotiation: Yes
Speed: 1000Mb/s
Duplex: Full
Port: Twisted Pair
PHYAD: 1
Transceiver: internal
Auto-negotiation: on
MDI-X: on (auto)
Supports Wake-on: pumbg
Wake-on: g
Current message level: 0x00000007 (7)
                       drv probe link
Link detected: yes
```
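The fields that matter most in that `ethtool red0` output are the negotiated speed, duplex and auto-negotiation state, and here they show a 1000Mb/s full-duplex link. Note that 1000BASE-T relies on auto-negotiation, so forcing it off on a gigabit copper port (as tried above) is usually not a useful test. A minimal sketch that pulls just those fields out; the function name `link_summary` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `ethtool IFACE` output on stdin and print the
# negotiated speed, duplex and auto-negotiation state on one line.
link_summary() {
    awk -F': ' '
        { gsub(/^[ \t]+/, "", $1) }
        $1 == "Speed"            { speed = $2 }
        $1 == "Duplex"           { duplex = $2 }
        $1 == "Auto-negotiation" { autoneg = $2 }
        END { printf "speed=%s duplex=%s autoneg=%s\n", speed, duplex, autoneg }'
}

# Example: ethtool red0 | link_summary
```

Running it before and after each change makes it easy to spot a link that silently drops to 100Mb/s or half duplex.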

```
[root@ipfire ~]# speedtest
Retrieving speedtest.net configuration…
Testing from Spectrum (My WAN IP Placeholder)…
Retrieving speedtest.net server list…
Selecting best server based on ping…
Hosted by ISP1 (Location 1) [92.06 km]: 31.701 ms
Testing download speed…
Download: 205.47 Mbit/s
Testing upload speed…
Upload: 37.59 Mbit/s

[root@ipfire ~]# speedtest
Retrieving speedtest.net configuration…
Testing from Spectrum (My WAN IP Placeholder)…
Retrieving speedtest.net server list…
Selecting best server based on ping…
Hosted by ISP2 (Location 2) [75.77 km]: 17.097 ms
Testing download speed…
Download: 228.72 Mbit/s
Testing upload speed…
Upload: 41.58 Mbit/s
```
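Putting the numbers side by side: the two runs from the firewall are roughly a quarter of the 800-900 Mbps measured with a computer plugged in directly, and about a fifth of the 1 Gbps plan, while upload stays close to the 40 Mbps tier. A minimal sketch of that arithmetic; the helper name `pct` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: express a measured rate as a percentage of a
# reference rate (both in Mbit/s), rounded to one decimal place.
pct() {
    awk -v got="$1" -v ref="$2" 'BEGIN { printf "%.1f\n", got / ref * 100 }'
}

# Firewall speedtest runs versus the 1 Gbps plan and the ~800 Mbps
# measured with a computer plugged in directly.
for run in 205.47 228.72; do
    echo "$run Mbit/s: $(pct "$run" 1000)% of plan, $(pct "$run" 800)% of direct"
done
```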

In addition, I SSH’d in, ran setup, changed red0 to a bogus static IP with a bogus gateway, and saved the settings; then I went back in, switched it back to DHCP, removed the bogus information, saved again, and rebooted… Also… didn’t work LOL

Can anyone point me in the right direction here? It can’t be this hard lol…

Also, as an FYI, I rebooted the firewall, which cleared out my temporary network card settings, so I could get another fresh run at this.

Lemme know!