Why does a (wrong) 192.0.0.0/8 rule in the Location Block module block access to IPFire from the INSIDE GREEN network?

I thought that Location Block only examines and blocks traffic from RED to the firewall?

Hi,

yes, that’s what I thought as well. But man proposes, God disposes; due to a bug, the location filter has been active on any interface, thus causing the interference.

The commit mentioned above now restricts it to work on red0 only.
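Why the 192.0.0.0/8 entry hits GREEN at all: 192.168.0.0/16, a common GREEN range, lies entirely inside 192.0.0.0/8, so once the filter runs on every interface, internal clients match the bogus entry. The following is a minimal Python sketch of that logic under stated assumptions, not IPFire's actual implementation (the real filter consists of iptables rules generated by rules.pl); the function `is_dropped` and the blocked list are hypothetical.

```python
import ipaddress

# Hypothetical blocked set containing only the erroneous entry from this thread.
BLOCKED = [ipaddress.ip_network("192.0.0.0/8")]


def is_dropped(in_iface: str, src: str, red_only: bool) -> bool:
    """Simplified model of the location filter (not IPFire's actual code).

    red_only=False models the buggy behaviour (filter active on every interface),
    red_only=True models the fix (filter only applied to traffic entering red0).
    """
    if red_only and in_iface != "red0":
        return False  # traffic from GREEN etc. is never looked up
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in BLOCKED)


# 192.168.0.0/16, commonly used on GREEN, lies entirely inside 192.0.0.0/8.
print(ipaddress.ip_network("192.168.0.0/16").subnet_of(BLOCKED[0]))  # True
print(is_dropped("green0", "192.168.1.10", red_only=False))  # True  (bug)
print(is_dropped("green0", "192.168.1.10", red_only=True))   # False (fix)
```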

@ms: That should work for ppp0 (dial-up connections) as well, since the traffic appears on red0 for those systems, too - or am I missing something?

Thanks, and best regards,

Peter Müller

I applied this commit to my Core 150 installation, but it does not do its job: it is still blocking my 172.16.40.0/24 segment. Only by allowing AU and EU do I regain access to my host.

Hi,

welcome to the IPFire community and thanks for providing feedback.

Did you reload your firewall engine afterwards (/etc/init.d/firewall reload)?

Thanks, and best regards,

Peter Müller

I did a reload. I did a reboot. But I still have to allow AU and EU to get it working with the present code.

Please compare the md5sum of my file against yours to make sure I applied the right commit:

[root@ipfire firewall]# md5sum ./rules.pl

0f1a242f7ac26e176e1e689265bae38a ./rules.pl
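If you want to compare hashes of several copies of rules.pl (for example after copying them off the firewall), a small Python 3 helper can reproduce what `md5sum` prints. This is only a sketch; it assumes Python 3 is available on the machine where you run it, which we do not assume for the firewall itself.

```python
import hashlib
import sys


def md5sum(path: str, chunk_size: int = 65536) -> str:
    """Return the hex MD5 digest of a file, as the md5sum tool does."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        # Read in chunks so large files do not have to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    # Example: python3 md5check.py ./rules.pl other/rules.pl
    for p in sys.argv[1:]:
        print(f"{md5sum(p)}  {p}")
```

Identical digests mean the files are byte-for-byte identical, so the same patch was applied.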

In the thread "Git - missing GLIBC", Michael says the problems are fixed. Can we use the filter now? Could someone write a blog post about the current state, please?

I am AU-based, and my Protectli IPFire box also succumbed to the same issue. I have all countries blocked and was totally locked out of IPFire.

I got caught up trying to connect with a serial cable, but put that on hold when I realised I would need to buy a serial-to-USB cable because my desktop has no serial port.

I then attempted a restore from my Core 149 backup, and in the end this worked OK.

After a successful restore, I had to manually restart the DHCP service and reinstall the Guardian add-on. I rebooted IPFire just to make sure all services were active.

I’m OCD when it comes to updates, so I disabled location blocking and then reapplied Core 150.

Once again I’m shown the value of running regular backups across all of my systems and data.

Thanks to all who contributed to this topic by posting their troubleshooting and resolution steps on this issue.

Technical issues will always occur on the likes of an ipfire. These are given to us as opportunities to delve deeper, learn and improve our contingency planning.

Despite this glitch I remain grateful to the team for giving us the excellent product that ipfire is.

Hi,

“For the record”, and for everyone who runs into this problem with Core 150, perhaps this helps:

After upgrading to Core 150 a few days ago, nothing was blocked at first. I waited.

Yesterday morning at 8:00, I suddenly lost all access, both to the GUI and via SSH. ‘ping’ was OK, but that was all. Apparently I had been hit by the bug. A database update!? OK, let’s see.

What I did (written down from memory!):

Since there is no keyboard or monitor down in the cellar where my box is standing, I had to take her upstairs with me.

I attached monitor, keyboard and GREEN only. No RED available here…

Disabled Geo-IP blocking by editing /var/ipfire/firewall/locationblock, changing

LOCATIONBLOCK_ENABLED=on

to

LOCATIONBLOCK_ENABLED=off

Added the fix from https://git.ipfire.org/?p=ipfire-2.x.git;a=commitdiff;h=c69c820025c21713cdb77eae3dd4fa61ca71b5fb to /usr/lib/firewall/rules.pl.

Rebooted. Access to GUI was OK. Hm.

I brought the box back to the cellar and attached GREEN and RED.

After a restart, the GUI was still OK, but DNS and DNSSEC were completely broken.

I opened https://[GREEN_IPFIRE_IP]:444/cgi-bin/location-block.cgi (“Enable Location based blocking” was unchecked, as intended), changed NOTHING, just clicked SAVE, and reloaded all rules on https://[GREEN_IPFIRE_IP]:444/cgi-bin/firewall.cgi.

Then I activated Geo-IP blocking again, and it’s still running OK.

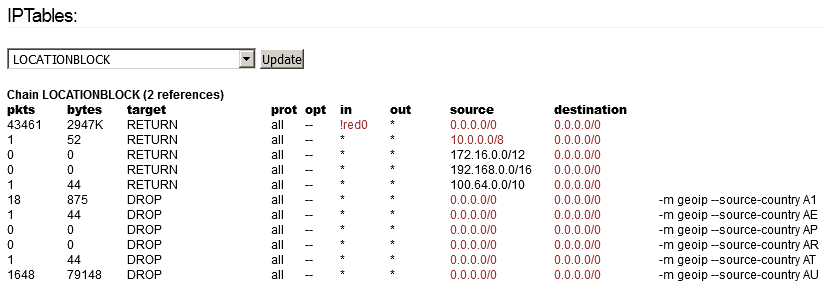

IPTables / LOCATIONBLOCK on https://[GREEN_IPFIRE_IP]/cgi-bin/iptables.cgi have to look like this:

HTH,

Matthias

You only need to edit the /var/ipfire/firewall/locationblock file, change “on” to “off”, and restart the firewall with /etc/init.d/firewall restart; everything then goes back to normal. I made the change after connecting a monitor and keyboard.

That’s the solution until there’s a patch.
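The workaround above (switching LOCATIONBLOCK_ENABLED from on to off in /var/ipfire/firewall/locationblock, then running /etc/init.d/firewall restart) can be scripted. A minimal Python sketch, assuming the file consists of plain KEY=value lines as the posts suggest; the function name `set_locationblock` is hypothetical, and the firewall restart still has to be done afterwards.

```python
from pathlib import Path


def set_locationblock(path: str, enabled: bool) -> None:
    """Rewrite LOCATIONBLOCK_ENABLED=... in a KEY=value settings file.

    All other lines are kept unchanged; the key is appended if it is missing.
    """
    p = Path(path)
    value = "on" if enabled else "off"
    lines = p.read_text().splitlines()
    found = False
    for i, line in enumerate(lines):
        if line.startswith("LOCATIONBLOCK_ENABLED="):
            lines[i] = f"LOCATIONBLOCK_ENABLED={value}"
            found = True
    if not found:
        lines.append(f"LOCATIONBLOCK_ENABLED={value}")
    p.write_text("\n".join(lines) + "\n")


# Usage (then run: /etc/init.d/firewall restart):
# set_locationblock("/var/ipfire/firewall/locationblock", enabled=False)
```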

To explain this patch:

The first iptables rule excludes local networks from being analysed by location-filter.

The second block of rules excludes private networks, which can also exist on RED, from the filter.

This circumvents the inconsistency in the libloc tables.

You can see this very clearly in the post by Matthias Fischer above.

I hope this clarifies the case a bit, including why only some systems are affected.
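The two exclusion steps can be modelled in a few lines of Python to show why they neutralise a bad database entry: a private source is skipped before any location lookup happens, so a bogus 192.0.0.0/8 mapping can no longer match it. This is a sketch under assumptions, not the commit itself: `LOCAL_NETS` is a hypothetical GREEN network, and the RFC 1918 ranges stand in for "private networks"; the exact networks excluded by the real iptables rules may differ.

```python
import ipaddress

# Assumption: example GREEN network; the real rules use the configured local networks.
LOCAL_NETS = [ipaddress.ip_network("192.168.1.0/24")]
# Assumption: RFC 1918 ranges stand in for "private networks that can exist on RED".
PRIVATE_NETS = [ipaddress.ip_network(n)
                for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def needs_location_check(src: str) -> bool:
    """Return False for sources skipped by the two exclusion steps."""
    addr = ipaddress.ip_address(src)
    if any(addr in n for n in LOCAL_NETS):      # step 1: local networks
        return False
    if any(addr in n for n in PRIVATE_NETS):    # step 2: private networks on RED
        return False
    return True


def is_dropped(src: str, blocked_nets) -> bool:
    """Drop only if the source is checked AND falls into a blocked network."""
    if not needs_location_check(src):
        return False
    addr = ipaddress.ip_address(src)
    return any(addr in n for n in blocked_nets)


# Even with the faulty 192.0.0.0/8 entry blocked, private sources pass.
bad_db = [ipaddress.ip_network("192.0.0.0/8")]
print(is_dropped("192.168.1.10", bad_db))  # False
print(is_dropped("192.0.2.1", bad_db))     # True
```

Public addresses inside a wrong entry are still affected, which is why the database itself also needs fixing.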

FYI, I have edited my firewall rules.pl with the above changes, enabled GeoIP and applied the firewall change.

All working again as desired, after reboot too, to be sure. Well done on the quick fix.

One thing the location snafu has shown is the importance of running code only where required (i.e. on the RED interface in this case). Local addresses in the database are obviously a different matter. Location blocking should be rock solid from now on.

I’m currently on the road (vacation) and had previously only disabled EU and DE in the location filter. It worked for one day, but now I can’t access my Nextcloud, neither directly nor via VPN. It is probably being blocked by the error again.

Well, the damage is done.

Now we have to think about a mechanism so that it does not happen again.

Before publishing the country databases, a filter could be run to detect and eliminate these errors.
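As an illustration of such a pre-publication check, here is a minimal Python sketch that flags country entries overlapping address space that should never be assigned to a country. The `RESERVED` list and the `(network, country)` input format are assumptions for this example, not the libloc data model, and the country codes in the usage line are placeholders.

```python
import ipaddress

# Assumption: ranges a country entry should never cover (RFC 1918, loopback,
# link-local, shared address space). A real check may need a longer list.
RESERVED = [ipaddress.ip_network(n) for n in (
    "10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16",
    "127.0.0.0/8", "169.254.0.0/16", "100.64.0.0/10")]


def suspicious_entries(entries):
    """Yield (network, country, reason) for IPv4 entries that need review."""
    for net_str, cc in entries:
        net = ipaddress.ip_network(net_str)
        if net.version != 4:
            continue  # this sketch only checks IPv4
        for r in RESERVED:
            if net.overlaps(r):
                yield net_str, cc, f"overlaps reserved {r}"
                break


# Placeholder data: "XX", "YY", "ZZ" are not real country assignments.
for row in suspicious_entries([("192.0.0.0/8", "XX"), ("1.0.0.0/24", "YY"),
                               ("172.16.0.0/16", "ZZ")]):
    print(row)
```

The sketch only reports entries instead of deleting them, so a human can decide whether an overlap is a data error.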

I have several remote machines without access and have to explain the error to the end customer / distributor. You totally lose credibility. Besides being trustworthy, these systems have to be reliable.

This is a brutal denial of service, as the machine’s connectivity is totally lost.

I do not want this to be taken as a negative review, but a comment to improve IPFire.

Thank you all.

This error could have been found by using the testing tree on systems that have a private IP as their RED IP.

Just my opinion.

The source of the error is another story.

Hi all,

apart from exporting the networks in a way xt_geoip can handle overlaps safely, there also seem to be a few issues with dummy RIR data or records transferred from ARIN after establishing Regional Internet Registries.

We are working on detecting and excluding them while building the location database without losing too much performance (string comparisons are involved, and it would be nice if we could avoid regular expressions). So please continue holding the line…

Thanks, and best regards,

Peter Müller

Hi,


Safeguards against the dummy RIR data and the records transferred from ARIN are now implemented (by using regular expressions, grmpf). APNIC still writes lots of /8 network chunks into the database, but since they own those, it is technically correct to do so.

Some aspects regarding xt_geoip export are still to be clarified before I can submit a patchset to the location mailing list and we go into QA.

Thanks, and best regards,

Peter Müller

Hi,

a patchset for sanitising RIR data (which fixes bugs #12500 and #12501) has been sent to the location mailing list. Although it did not reveal any quirks on our Q/A system, I would be grateful if some of you could have a look at it and report anything suspicious here or on the mailing list (preferred).

At the moment, we are struggling with bug #12506, but once this is solved, I am confident we can roll out a fixed version with the upcoming Core Update 151, barring unforeseen incidents.

Thanks, and best regards,

Peter Müller

Hi all,

meanwhile, @ms solved bug #12506 so we can generate and publish new location databases again.

The most recent one (at the time of writing) does not break any remaining Core Update 150 systems with the location filter enabled, as we reverted some changes that were introduced to make the database more accurate but triggered the combination of bugs mentioned earlier.

This still leaves us with finding an elegant way of introducing the more accurate database again without breaking anything. Please refer to this thread on both the development and location mailing lists for further information.

Apart from that, thanks again for your understanding and patience. There seems to be a light at the end of the tunnel, and this time we believe it’s not a train.

Thanks, and best regards,

Peter Müller

Dear Peter,

thanks for the info. Is there anything we can do while we wait for Core 151?

Or is it better to just wait for it?

Best regards

UT1

Hi,

from my point of view, there is nothing to do apart from installing Core Update 151 as soon as we have released it (personal ETA: next week, but that is only speculation).

Your system will fetch new location databases with Core Update 150 as well, but they are not as accurate as they could be. It will probably take some time (Core Update 152/153) until we can finally publish accurate databases.

Thanks, and best regards,

Peter Müller