I’m sure the microcode has changed on some CPU architectures and not on others, due to security flaws.

And depending on the age of the architecture, some may have remained the same (too old to bother patching).

How did these patches affect newer, "patched" CPUs vs. unpatched CPUs?

I don’t think even that situation would apply, because the J3160 has a turbo frequency of 2.2 GHz, which gives it a clock speed about 22% higher than the D525’s fixed 1.8 GHz. The D525 has no turbo frequency.

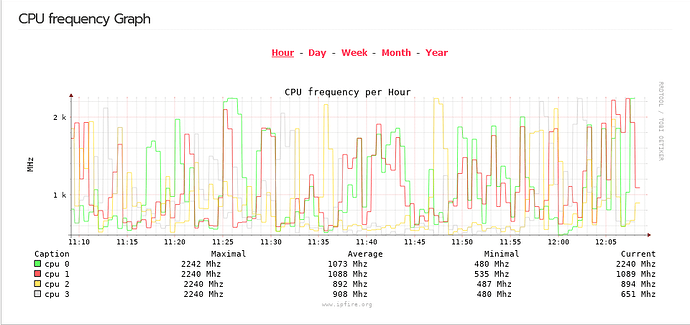

And turbo frequency is used often on my IPFire:

I don’t think that we have added more “load” since then. What is probably very noticeable are the Spectre/Meltdown mitigations that have been rolled into IPFire and Intel’s microcode. The D525 will be less affected by them than more modern processors, so on the newer CPU the impact might be huge.
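One way to compare the two boxes is to check which mitigations the running kernel actually reports. Linux (4.15 and later) exposes one status file per vulnerability under `/sys/devices/system/cpu/vulnerabilities/`. The Python sketch below reads those files and groups them. It assumes that directory exists, and anything whose status does not start with "Mitigation", "Vulnerable" or "Not affected" is put into an "other" bucket. It is an illustration, not an IPFire tool.

```python
from __future__ import annotations

from pathlib import Path

# sysfs directory where Linux (4.15+) reports per-vulnerability status,
# e.g. files named "meltdown", "spectre_v1", "spectre_v2".
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_mitigations(vuln_dir: Path = VULN_DIR) -> dict[str, str]:
    """Return {vulnerability name: status text}; empty if the directory is missing."""
    if not vuln_dir.is_dir():
        return {}
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(vuln_dir.iterdir())
        if entry.is_file()
    }


def summarize(status: dict[str, str]) -> dict[str, list[str]]:
    """Group vulnerability names by how their status text starts."""
    groups: dict[str, list[str]] = {
        "mitigated": [], "vulnerable": [], "not affected": [], "other": [],
    }
    for name, text in status.items():
        lowered = text.lower()
        if lowered.startswith("mitigation"):
            groups["mitigated"].append(name)
        elif lowered.startswith("vulnerable"):
            groups["vulnerable"].append(name)
        elif lowered.startswith("not affected"):
            groups["not affected"].append(name)
        else:
            # e.g. "Unknown: ..." on some kernels or hypervisors
            groups["other"].append(name)
    return groups


if __name__ == "__main__":
    for group, names in summarize(read_mitigations()).items():
        print(f"{group}: {', '.join(names) or '-'}")
```

Running it on both the D525 and the J3160 could show whether the newer CPU carries noticeably more active mitigations.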

You are looking at computational performance. That may very well be, and nobody is doubting that. However, the QoS is not very CPU-intensive. What I was talking about is this:

Documentation/memory-barriers.txt in the Linux kernel tree (git.ipfire.org, thirdparty/kernel/linux.git)

It is about how to synchronise a counter from one CPU core to another. Incrementing the counter by one is something both processors do very quickly.

In my tests further up this thread, ksoftirqd was approaching 100% CPU usage as I increased the QoS speed from 50 Mbps to 300 Mbps. My ISP plan is for 300 Mbps. I really don’t understand the relationship between ksoftirqd and QoS bandwidth, but this is what the Linux man pages say about it:

ksoftirqd is a per-cpu kernel thread that runs when the machine is under heavy soft-interrupt load. Soft interrupts are normally serviced on return from a hard interrupt, but it’s possible for soft interrupts to be triggered more quickly than they can be serviced. If a soft interrupt is triggered for a second time while soft interrupts are being handled, the ksoftirq daemon is triggered to handle the soft interrupts in process context. If ksoftirqd is taking more than a tiny percentage of CPU time, this indicates the machine is under heavy soft interrupt load.

I’d like to understand what is happening when ksoftirqd climbs towards 100% usage as I increase the allowed bandwidth in QoS.

So this is coming from your network interface. Every time it receives a packet, it raises an interrupt telling the OS that there is data ready to be received. If the CPU cannot keep up, the kernel may defer the interrupt handling and come back to it later. That deferred work is what runs in ksoftirqd.

If you only have one single ksoftirqd using 100% CPU, that means your network adapter is not able to load-balance over more than one processor, and your system is limited to the performance of a single core. Usually that is even the first CPU, which also has to handle lots of other tasks that cannot easily be distributed across multiple cores. Ideally you should see all CPUs with a similar load.
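To check whether receive processing is really spread across cores, the per-CPU `NET_RX` counters in `/proc/softirqs` can be sampled twice while a speed test runs. The Python sketch below takes two snapshots a few seconds apart and reports what share of the new `NET_RX` softirqs each CPU handled. The 5-second window is an arbitrary choice, and CPUs are labelled by their position in the table.

```python
from __future__ import annotations

import time
from pathlib import Path

SOFTIRQS = Path("/proc/softirqs")


def parse_softirqs(text: str) -> dict[str, list[int]]:
    """Parse /proc/softirqs into {softirq name: [count per CPU]}."""
    lines = text.strip().splitlines()
    ncpus = len(lines[0].split())  # header row: CPU0 CPU1 ...
    table: dict[str, list[int]] = {}
    for line in lines[1:]:
        name, _, counts = line.partition(":")
        table[name.strip()] = [int(v) for v in counts.split()[:ncpus]]
    return table


def net_rx_share(before: str, after: str) -> list[float]:
    """Percentage of NET_RX softirqs handled by each CPU between two snapshots."""
    rx0 = parse_softirqs(before)["NET_RX"]
    rx1 = parse_softirqs(after)["NET_RX"]
    delta = [b - a for a, b in zip(rx0, rx1)]
    total = sum(delta)
    if total == 0:
        return [0.0] * len(delta)
    return [round(100.0 * d / total, 1) for d in delta]


if __name__ == "__main__" and SOFTIRQS.exists():
    first = SOFTIRQS.read_text()
    time.sleep(5)  # run a speed test during this window
    second = SOFTIRQS.read_text()
    for cpu, pct in enumerate(net_rx_share(first, second)):
        print(f"CPU{cpu}: {pct:5.1f}% of NET_RX softirqs")
```

If one CPU shows nearly 100% here, that matches the single-core limit described above.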

Would irqbalance help with the interrupts maxing out a single thread by spreading them out amongst the available threads/cores?

Could this sort of thing be related?

Protectli does not say which Intel adapter is used.

I pulled that from post number 7 above.

If the hardware doesn’t support it, no. Normally irqbalance makes things worse because it tries to do funny things.
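Whether "the hardware supports it" can be roughly checked from the number of receive queues the driver exposes under `/sys/class/net/<dev>/queues/`. A NIC with only `rx-0` delivers its receive work through one queue, so the hardware itself cannot spread it across cores. The Python sketch below counts those queues. It assumes the interface is called `red0` (IPFire's usual name for the internet-facing NIC), and another name can be passed on the command line. It does not account for software steering such as RPS.

```python
from __future__ import annotations

import sys
from pathlib import Path


def count_queues(dev: str, sysfs: Path = Path("/sys/class/net")) -> tuple[int, int]:
    """Count the rx-*/tx-* queue directories the kernel exposes for a NIC."""
    qdir = sysfs / dev / "queues"
    if not qdir.is_dir():
        return (0, 0)
    rx = sum(1 for _ in qdir.glob("rx-*"))
    tx = sum(1 for _ in qdir.glob("tx-*"))
    return (rx, tx)


def can_spread_rx(rx_queues: int) -> bool:
    """The NIC needs more than one RX queue to spread receive work in hardware."""
    return rx_queues > 1


if __name__ == "__main__":
    dev = sys.argv[1] if len(sys.argv) > 1 else "red0"  # assumed interface name
    rx, tx = count_queues(dev)
    if rx == 0:
        print(f"{dev}: no queue information found")
    else:
        verdict = "can" if can_spread_rx(rx) else "cannot"
        print(f"{dev}: {rx} RX / {tx} TX queues; hardware {verdict} spread RX")
```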

No, there are multiple instances of ksoftirqd sharing the load. But I am watching htop in real time, so I cannot tell accurately how much CPU each ksoftirqd is using. I am just watching the top line in htop and reporting the highest CPU usage I see for that particular instance of ksoftirqd. I can see the other three instances (it is a 4-core CPU) bouncing up and down the htop list; I just can’t mentally keep track of how much CPU each of them is using.

Edit: if you scroll up to post 24 and look at the second screenshot, you will see an example: ksoftirqd/1 is using 53.3%, ksoftirqd/2 is using 24.8%, ksoftirqd/0 is using 0.8%, and ksoftirqd/3 is not showing, so it is using 0.0%. But this is just a moment in time during a speed test. I cannot say for sure how much CPU each instance uses on average.
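Instead of eyeballing htop, the average usage of each ksoftirqd thread over a fixed window can be computed from `/proc/<pid>/stat`. Fields 14 and 15 (utime and stime) count clock ticks. The Python sketch below samples them before and after a 5-second window, which is an arbitrary choice, and divides by the kernel tick rate from `os.sysconf("SC_CLK_TCK")`. The result is the percentage of one core each thread used, averaged over the window.

```python
from __future__ import annotations

import os
import time
from collections.abc import Iterable
from pathlib import Path


def cpu_ticks(stat_line: str) -> int:
    """utime + stime (fields 14 and 15 of /proc/<pid>/stat), in clock ticks."""
    # Field 2 (comm) is wrapped in parentheses and may contain spaces,
    # so only split what follows the last ')'. rest[0] is field 3 (state).
    rest = stat_line[stat_line.rindex(")") + 2:].split()
    return int(rest[11]) + int(rest[12])


def usage_percent(before: int, after: int, seconds: float, hz: int) -> float:
    """Average CPU usage over the interval, as a percentage of one core."""
    return 100.0 * (after - before) / (hz * seconds)


def ksoftirqd_threads() -> dict[int, str]:
    """Map PID -> name for every ksoftirqd/N kernel thread."""
    threads: dict[int, str] = {}
    for comm in Path("/proc").glob("[0-9]*/comm"):
        try:
            name = comm.read_text().strip()
        except OSError:
            continue  # process exited while we were scanning
        if name.startswith("ksoftirqd/"):
            threads[int(comm.parent.name)] = name
    return threads


def sample(pids: Iterable[int]) -> dict[int, int]:
    """Read the current utime + stime for each PID that still exists."""
    ticks: dict[int, int] = {}
    for pid in pids:
        try:
            ticks[pid] = cpu_ticks(Path(f"/proc/{pid}/stat").read_text())
        except OSError:
            pass
    return ticks


if __name__ == "__main__":
    interval = 5.0  # start the speed test first, then run this during it
    hz = os.sysconf("SC_CLK_TCK")
    threads = ksoftirqd_threads()
    before = sample(threads)
    time.sleep(interval)
    after = sample(threads)
    for pid, name in sorted(threads.items(), key=lambda item: item[1]):
        if pid in before and pid in after:
            pct = usage_percent(before[pid], after[pid], interval, hz)
            print(f"{name}: {pct:.1f}% average over {interval:.0f} s")
```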

That strongly suggests that your NICs can balance the load across two processors.

I think the bottleneck is now “Cake”. I have done some tests with the IPFire ECO, and it can only route 190 Mbit/s with QoS enabled.

I have switched back to fq_codel, and it can handle 650 Mbit/s.

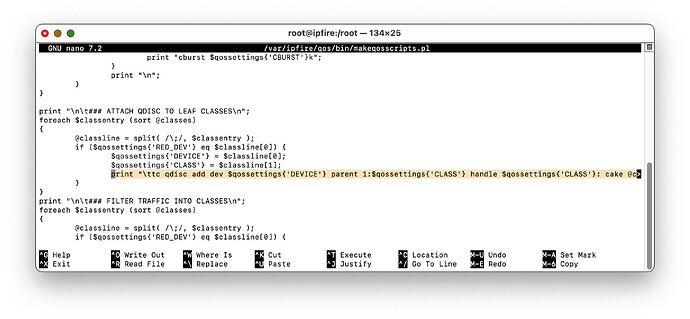

Please test by editing /var/ipfire/bin/makeqosscripts.pl:

```diff
--- makeqosscripts.pl.org 2024-04-30 09:33:20.626373902 +0200
+++ makeqosscripts.pl 2024-05-01 10:50:15.897666665 +0200
@@ -209,7 +209,7 @@
 if ($qossettings{'RED_DEV'} eq $classline[0]) {
 $qossettings{'DEVICE'} = $classline[0];
 $qossettings{'CLASS'} = $classline[1];
-print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake @cake_options\n";
+print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: fq_codel limit 10240 quantum 1514\n";
 }
 }
 print "\n\t### FILTER TRAFFIC INTO CLASSES\n";
@@ -380,7 +380,7 @@
 if ($qossettings{'IMQ_DEV'} eq $classline[0]) {
 $qossettings{'DEVICE'} = $classline[0];
 $qossettings{'CLASS'} = $classline[1];
-print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 2:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake @cake_options\n";
+print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 2:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: fq_codel limit 10240 quantum 1514\n";
 }
 }
 print "\n\t### FILTER TRAFFIC INTO CLASSES\n";
```

After this change, disable and re-enable QoS to regenerate the script.
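For anyone who prefers not to edit by hand, the two substitutions in the diff can also be applied with a small script. The Python sketch below replaces `cake @cake_options` with `fq_codel limit 10240 quantum 1514`, but only on `tc qdisc add dev` lines. It refuses to write unless it finds exactly the two lines the diff touches, and it keeps a `.org` copy of the original, following the file name used in the diff. The default path is the one found later in this thread (`/var/ipfire/qos/bin/makeqosscripts.pl`). Treat it as an unofficial helper.

```python
from __future__ import annotations

import shutil
import sys
from pathlib import Path

OLD = "cake @cake_options"
NEW = "fq_codel limit 10240 quantum 1514"
DEFAULT = Path("/var/ipfire/qos/bin/makeqosscripts.pl")


def switch_to_fq_codel(script: str) -> tuple[str, int]:
    """Swap the Cake leaf qdisc for fq_codel on every 'tc qdisc add dev' line."""
    out: list[str] = []
    changed = 0
    for line in script.splitlines(keepends=True):
        if "tc qdisc add dev" in line and OLD in line:
            line = line.replace(OLD, NEW)
            changed += 1
        out.append(line)
    return "".join(out), changed


def patch_file(path: Path) -> bool:
    """Patch the file in place, keeping a .org backup; return True on success."""
    if not path.is_file():
        print(f"{path} not found")
        return False
    patched, changed = switch_to_fq_codel(path.read_text())
    if changed != 2:  # the diff touches exactly two lines (parent 1: and parent 2:)
        print(f"expected 2 Cake lines, found {changed}; leaving {path} unchanged")
        return False
    shutil.copy2(path, path.with_name(path.name + ".org"))  # keep the original
    path.write_text(patched)
    print("patched; now disable and re-enable QoS to regenerate the script")
    return True


if __name__ == "__main__":
    patch_file(Path(sys.argv[1]) if len(sys.argv) > 1 else DEFAULT)
```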

Thanks, @arne_f! That is exactly where my hardware topped out with QoS. I may not be able to test until this weekend, but I will confirm whether my hardware behaves the same.

PoC question: could the choice between Cake and fq_codel for QoS be turned into a toggle in the Web UI?
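As a rough illustration of what such a toggle might generate, the Python sketch below builds the per-class `tc qdisc add` line for either leaf qdisc, mirroring the two `print` lines in the diff. The settings key `QDISC`, the device names `red0`/`imq0` and the class numbers `101`/`201` are hypothetical placeholders, not existing IPFire settings.

```python
from __future__ import annotations

# fq_codel parameters taken from the patch posted in this thread.
FQ_CODEL = "fq_codel limit 10240 quantum 1514"


def leaf_qdisc(device: str, parent_major: int, cls: str,
               qdisc: str, cake_options: list[str]) -> str:
    """Build the per-class 'tc qdisc add' command for the selected leaf qdisc."""
    if qdisc == "cake":
        params = " ".join(["cake", *cake_options])
    elif qdisc == "fq_codel":
        params = FQ_CODEL
    else:
        raise ValueError(f"unsupported leaf qdisc: {qdisc!r}")
    return f"tc qdisc add dev {device} parent {parent_major}:{cls} handle {cls}: {params}"


if __name__ == "__main__":
    # Hypothetical settings: "QDISC" stands in for the proposed Web UI toggle.
    settings = {"RED_DEV": "red0", "IMQ_DEV": "imq0", "QDISC": "fq_codel"}
    print(leaf_qdisc(settings["RED_DEV"], 1, "101", settings["QDISC"], []))
    print(leaf_qdisc(settings["IMQ_DEV"], 2, "201", settings["QDISC"], []))
```

Keeping the choice in one function means both the upload (parent `1:`) and download (parent `2:`) paths always switch together.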

Any indication if it is 1) an inherent limitation of Cake, or 2) related to how it was implemented in IPFire?

It might also be a third option:

3) suboptimal execution on this hardware (NICs, APU).

Not all silicon is equal.

Yes. It could be that Cake outperforms fq_codel on certain hardware, while fq_codel wins on other hardware.

I attempted to follow your instructions to switch to fq_codel, but backed out. First, I made a backup and downloaded the IPFire backup files. Then I stopped QoS. Then I SSH’d in, but could not find a bin directory under /var/ipfire. I ended up finding makeqosscripts.pl at

/var/ipfire/qos/bin

not at

/var/ipfire/bin

When I opened makeqosscripts.pl in nano, it was huge, many times longer than the code you posted. I was unsure whether I should replace the entire file contents with what you posted, or insert your code somewhere in the file.

If you can post more complete instructions for someone who does not work on the command line all the time, I’ll give it another try.

Thanks.

This! You are finding two different lines (marked with -) and replacing each with the corresponding line marked with +.

Here are detailed instructions:

Edit:

nano /var/ipfire/qos/bin/makeqosscripts.pl

Do a Where Is search by pressing ^W (also known as CTRL-W).

Find the first - line (do not include the -):

print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: cake @cake_options\n";

and you will see this:

Replace that entire line with the first + line (do not include the +):

print "\ttc qdisc add dev $qossettings{'DEVICE'} parent 1:$qossettings{'CLASS'} handle $qossettings{'CLASS'}: fq_codel limit 10240 quantum 1514\n";

Repeat for the second set (the line with parent 2:), then save with ^O and exit with ^X.
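After saving, and then disabling and re-enabling QoS so the script is regenerated, one way to confirm the change took effect is to look at the live qdiscs with `tc qdisc show`. Each line of its output starts with `qdisc <kind> <handle>`. The Python sketch below counts the kinds, so the Cake count should drop to zero and fq_codel should appear. It assumes `tc` is on the PATH. The sample output in the test is made up for illustration.

```python
from __future__ import annotations

import subprocess
from collections import Counter


def qdisc_kinds(tc_output: str) -> dict[str, int]:
    """Count qdisc kinds in `tc qdisc show` output (second word of each 'qdisc' line)."""
    kinds: Counter[str] = Counter()
    for line in tc_output.splitlines():
        words = line.split()
        if len(words) >= 2 and words[0] == "qdisc":
            kinds[words[1]] += 1
    return dict(kinds)


def live_qdiscs() -> dict[str, int]:
    """Run `tc qdisc show` and count kinds; empty dict if tc is unavailable."""
    try:
        result = subprocess.run(
            ["tc", "qdisc", "show"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return {}
    return qdisc_kinds(result.stdout)


if __name__ == "__main__":
    kinds = live_qdiscs()
    print(kinds or "could not run 'tc qdisc show'")
    if kinds.get("cake"):
        print("cake qdiscs are still present; re-check both edited lines and restart QoS")
```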